We propose a pre-training strategy called Multi-modal Multi-task Masked Autoencoders (MultiMAE). It differs from standard Masked Autoencoding in two key aspects:

We make use of masking (across image patches and input modalities) to make training MultiMAE tractable as well as to ensure cross-modality predictive coding is indeed learned by the network. We show this pre-training strategy leads to a flexible, simple, and efficient framework with improved transfer results to downstream tasks. In particular, the same exact pre-trained network can be flexibly used when additional information besides RGB images is available or when no information other than RGB is available – in all configurations yielding competitive to or significantly better results than the baselines. To avoid needing training datasets with multiple modalities and annotated tasks, we train MultiMAE entirely using pseudo labeling, which makes framework widely applicable to any RGB dataset.

The experiments are performed on multiple transfer tasks (image classification, semantic segmentation, depth estimation) and datasets (ImageNet, ADE20K, Taskonomy, Hypersim, NYUv2). The results show an intriguingly impressive capability by the model in cross-modal/task predictive coding and transfer. Please see our Github repository for code and pre-trained models.

Standard Masked Autoencoding is an effective pre-training strategy, but so far has been limited to a single modality, namely RGB images. Practically speaking, often more than just a single modality is available (either from sensors or pseudo labeled), and pre-training strategies should be able to optionally make use of them. In addition, pre-training on more than just a single task is a powerful tool to steer what representations a model will learn and has been shown to improve performance on transfer tasks.

The MultiMAE pre-training objective is to reconstruct masked-out patches of multiple modalities. To do that, a small set of visible patches is sampled from all modalities and processed jointly using a Transformer encoder. Task-specific decoders reconstruct the masked-out patches by first performing a single cross-attention step from queries to all encoded tokens, followed by a shallow Transformer. The queries consist of mask tokens (in gray), with the task-specific encoded tokens added at their respective positions. By keeping the number of encoded patches constant, the bulk of the computational cost does not scale with the number of modalities, making MultiMAE a highly efficient pre-training method.

We pre-train MultiMAE on two additional tasks besides RGB images, namely scene depth and semantic segmentation. Using powerful pre-trained networks, we pseudo label the entire ImageNet-1K dataset with these tasks. This has the advantage that no aligned multi-task dataset is required – all one needs is a large unlabeled RGB dataset and off-the-shelf pseudo labeling networks.

During pre-training, MultiMAE is always given the same number of visible input tokens, but they are sampled randomly across the different modalities using a symmetric Dirichlet distribution. Explore in this interactive demo, how different random mask samples affect the MultiMAE predictions. Use the and buttons to cycle through different images and the button to sample new random masks. Note that since we only compute the loss on the masked-out regions, we overlay the input patches on the predictions.

(Demos are best viewed in the Chrome browser on desktop.)

Masked inputs

MultiMAE predictions

Original reference

RGB

Depth

Semantic

How does the percentage of masked patches affect MultiMAE predictions? Use the slider to choose how many patches are given as input to the MultiMAE model.

Masked inputs

MultiMAE predictions

Original reference

RGB

Depth

Semantic

Hint: Drag the slider to change the number of masked tokens.

In the RGB-only transfer case (left part of figure), the encoder of a pre-trained MultiMAE can directly be used as a ViT with a newly initialized decoder head for the given downstream task. Since MultiMAE was pre-trained on more than just RGB, it can optionally accept additional modalities at transfer time (right part of figure).

MultiMAE is an effective pre-training strategy even when RGB is the only available downstream modality. It retains the benefits of MAE, an already powerful pre-training strategy, and can sometimes even outperform it. Notice how MAE and MultiMAE can both significantly surpass supervised ImageNet-1K pre-training.

| Method | Arch. | Classification (Top 1 acc. ↑) |

Semantic Segmentation (mIoU ↑) |

Depth (δ1 ↑) |

||

|---|---|---|---|---|---|---|

| ImageNet-1K | ADE20K |

Hypersim | NYUv2 | NYUv2 | ||

| Supervised (DeiT) | ViT-B | 81.8 | 45.8 | 33.9 | 50.1 | 80.7 |

| MAE | ViT-B | 83.3 | 46.2 | 36.5 | 50.8 | 85.1 |

| MultiMAE | ViT-B | 83.3 | 46.2 | 37.0 | 52.0 | 86.4 |

Making use of additionally available modalities during fine-tuning has the potential to significantly increase performance. On NYUv2 and Hypersim semantic segmentation, using either RGB-only or depth-only inputs works well, but when combining the two, we see large increases in performance – especially so when the ground-truth depth is of high quality, as in Hypersim.

In case ground truth depth is not available at transfer time, MultiMAE can also make use of pseudo labeled depth (and even pseudo labeled semantic segmentation 😉 ). Here, pD = pseudo depth, and pS = pseudo semantic segmentation. While the improvement is not as large as with ground-truth depth, adding pseudo labeled depth and semantic segmentation still provides a slight performance increase.

MultiMAE demonstrates intriguing capabilities for cross-modal predictive coding since not only was it trained to predict each modality from any other modality, but also to combine information from multiple modalities. In the following interactive demonstrations, you can explore some of these capabilities.

How do MultiMAE predictions change if you give it more RGB patches than depth patches, or even only semantic information? In this interactive demo, you can use the slider to change the proportion of visible tokens per modality. Notice how semantically stable the predictions stay as tokens are completely removed from certain modalities and added to others, suggesting that MultiMAE has learned a shared representation for these modalities.

Masked inputs

MultiMAE predictions

Original reference

RGB

Depth

Semantic

Hint: Drag the slider to change the ratio of visible tokens per modality.

In this demo, we give as input the full depth map and two RGB patches, and we show the full reconstructed RGB image. No semantic maps are given as input. Using the slider, you can change the hue of a single RGB patch, while the other is locked. Notice how only the color of the selected objects change, while the rest stays constant. MultiMAE effectively propagates color information from two RGB patches to only the relevant objects in the image, which it extracts from the depth map. In the images showing fruit and vegetables, color information is not only propagated to the same object, but to all objects that MultiMAE implicitly assigns to the same semantic class – even over larger distances.

Full depth input

Masked RGB input

RGB reconstruction

Original RGB reference

Hint: Drag the slider to change the hue of only a single RGB patch. Use the buttons to explore different images.

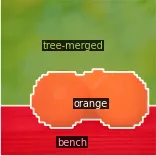

Similar to the above demonstration, we now again give a full depth map, but this time change the hue of the three middle RGB patches. Depending on the given color, MultiMAE predicts the objects as either oranges, apples, or sports balls.

Full depth input

Masked RGB input

Semantic reconstruction

Orig. semantic reference

Hint: Drag the slider to change the hue of the central three RGB patches.

Let's see how MultiMAE reconstructs incomplete RGB inputs given full semantic segmentation maps. When editing the "road" class to "grass" or "snow", the RGB predictions change accordingly. Notice how even the trees in the background are much greener when there is no snow in the semantic segmentation inputs, even though their class label is the same for all examples!

Masked RGB input

Semantic input

RGB reconstruction

Original RGB reference

Hint: Use the buttons to explore different semantic edits.

@article{bachmann2022multimae,

author = {Roman Bachmann and David Mizrahi and Andrei Atanov and Amir Zamir},

title = {{MultiMAE}: Multi-modal Multi-task Masked Autoencoders},

journal = {arXiv preprint arXiv:2204.01678},

year = {2022},

}